UE_LOG(LogFileBio, Display, TEXT("A bit about me"));

Hello World! I am a human *definitely not a robot* and to prove it, I've attached a picture of my very real, totally non-metallic face. Welcome to my little patch of digital dirt! Here, you'll find an assortment of my past projects which, although not as refined or diverse as I would like them to be, are still a testament to my fanaticism for video games and my technical aptitude. I am a driven individual who found himself listening to a lecture on life-long learning and took it to heart. Whether it's fine-tuning game mechanics, optimizing code architecture for maximum robustness, or organizing my digital life with borderline-OCD precision, I'm always pushing for efficiency, balance, and mastery in my skills. If getting things done means diving into DevOps, Web Development, Computer Vision, AI, or any other domain that wasn't originally on my radar, so be it. I learn what I need to, and I make it work. Oh, almost forgot to mention, I am also a computer engineer from NUST with a CGPA of 3.25 (because grades matter too, right? ... Right??).

Developed as a freelance project using Scene Captures in Unreal Engine, this tool helps dentists accurately place dental caries on 3D teeth models. Its user-friendly interface lets dentists:
➸ Place teeth and caries
➸ Adjust their size and depth
➸ Hide and show them individually or collectively
➸ Visualize the results in four viewing modes: BaseColor, Depth, Normal, and Final Color

The tool also includes a library of common caries types for quick access and can import custom models at runtime using glTFRuntime. Its many customizable options are saved to and loaded from disk, and screenshots can be saved to disk from any of the viewing modes.

Built as a flexible foundation for third-person shooters, this project started as an experiment with Unreal's Game Animation Sample 🏃🏼💨 and quickly grew into a full-featured template for rapid prototyping:
➸ Main menu and character selection with animated UI, camera transitions, and SFX
➸ Three unique classes: Assassin, Gunner, Tank; each with distinct scale and health
➸ TDM map with three lanes, spawn areas, and bots that use perception, take cover, and reload
➸ Full weapon arsenal: pistol, SMG, AR, LMG (with mobility restrictions), shotgun; each with unique damage, procedural character recoil, firing modes (semi, burst, auto), and headshot multipliers
➸ ADS integration into the animation blueprint, with direction feedback and limiters that automatically turn the character in place if the player starts firing behind themselves
➸ Melee combos with root-motion animations, hit reactions, and sound effects
➸ Shields with four recovery stages and visual feedback (SFX, VFX, UI)
➸ Refined gameplay systems: aim sensitivity, match timer, respawn, score tracking, and dynamic crosshair

From animation tweaks to AI overhauls, every feature was built, tested, broken, and rebuilt, making this template a true playground for learning and iteration.

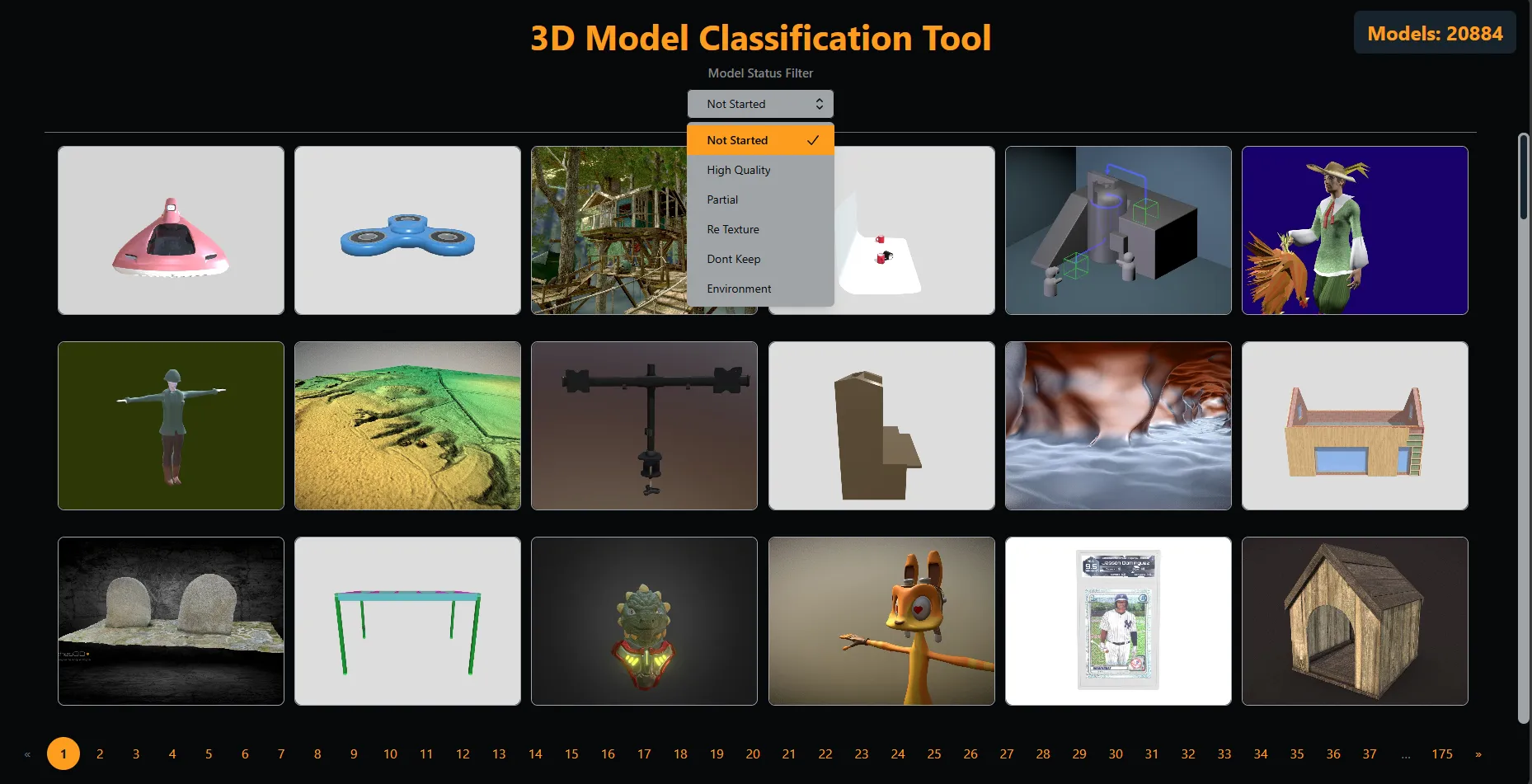

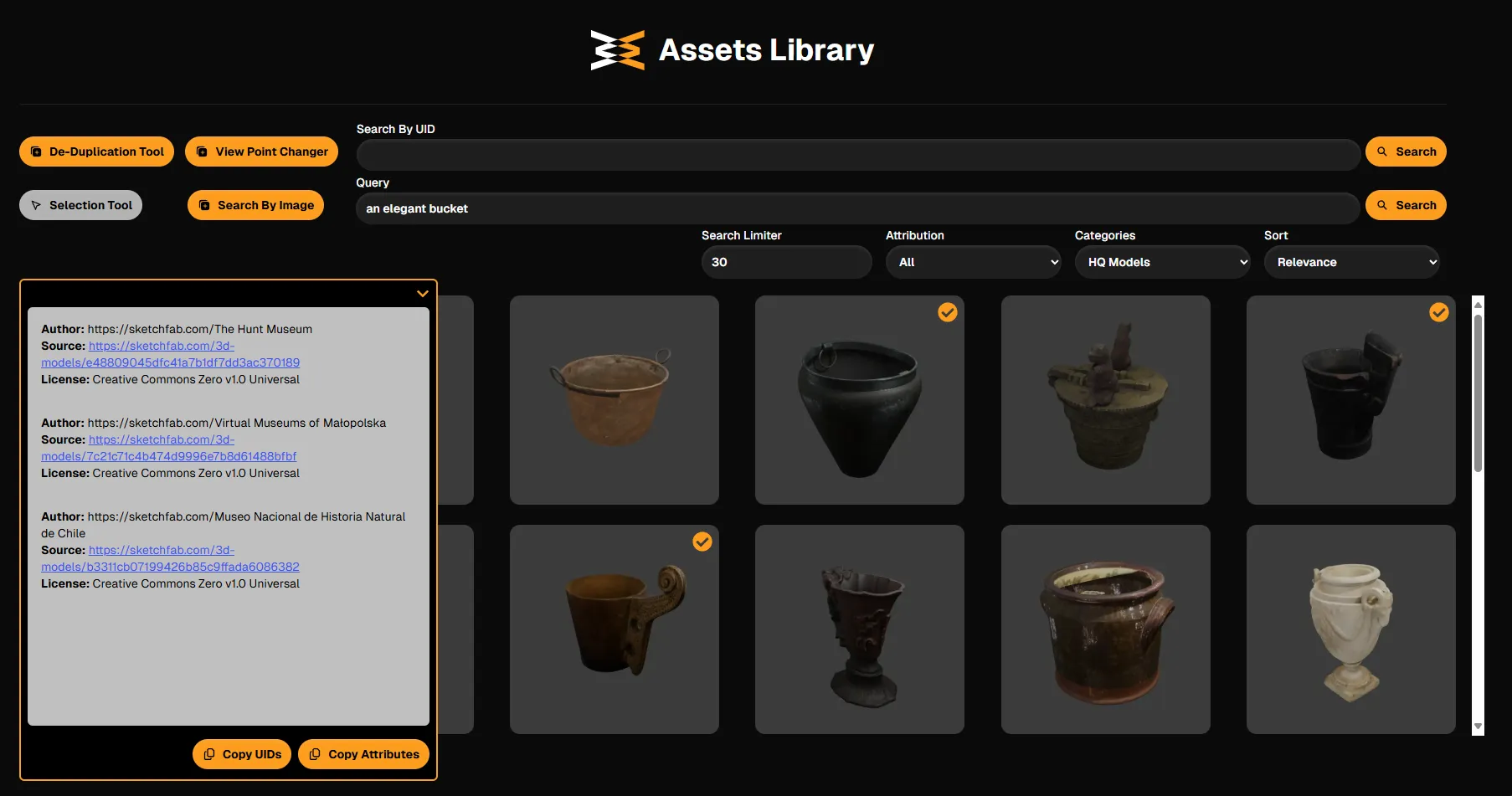

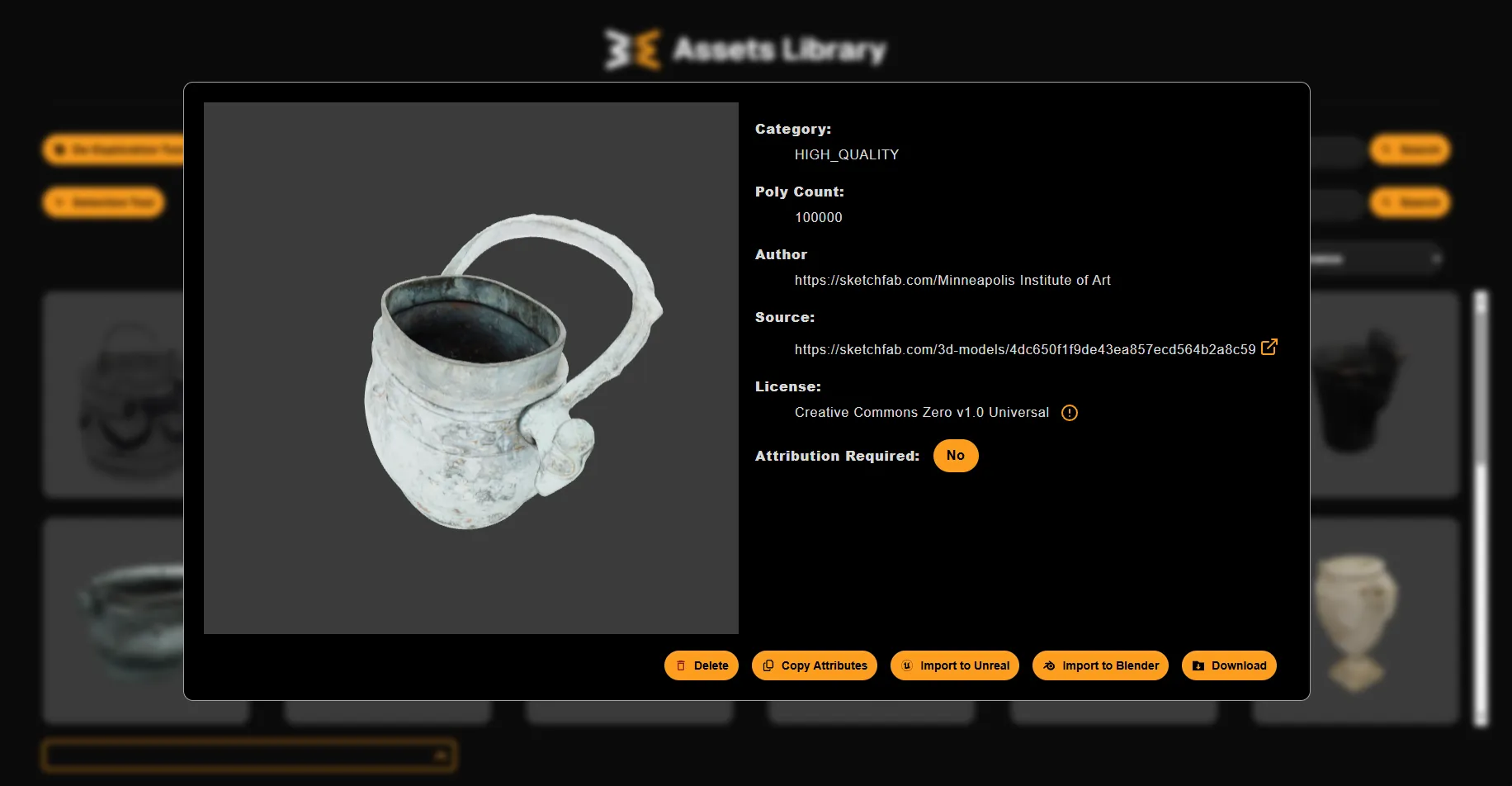

3D Models Digital Asset Management Solution

Jan 2025

As part of our mission at Altroverse to build a fully automated pipeline that converts user prompts into photorealistic 3D scenes and then into synthetic datasets, we needed a high-quality asset library of 3D models. I started with Objaverse-XL, a dataset of 10 million 3D assets, but many were low-quality, stylized, or unsuitable for realism. I tested an aesthetic score predictor, but it failed to filter out non-photorealistic models, so I developed a more hands-on approach.

To classify assets, I wrote a script using the Blender Python API that rendered each 3D model from four angles, and another script to clean and align the models by unparenting, centering, merging meshes, and repositioning origins to the bottom centers for proper ground-up scaling. Working with Sualeh, we built a web-based classification tool using PostgreSQL, sorting assets into High-Quality Models, Partial Models, Environments, and Don't Keep categories.

Even after alignment, the models lacked real-world scale. To solve this, I used a Vision-Language Model (VLM) to generate a caption for each model and an LLM to estimate dimensions based on that caption. To find the optimal architecture, I benchmarked multiple VLM-LLM pipelines, testing their ability to approximate correct scales with the least error.

Instead of traditional category-based asset retrieval, I designed a CLIP-powered vector search. A single rendered image embedding of each model is stored in a vector database, so users can query assets using natural language, just like Google Image Search: their search text is embedded into the same vector space for semantic retrieval without predefined categories.

To make the asset library accessible to the team, I built a Flask backend to serve both renders and assets, handling query processing, asset retrieval, database management, and frontend delivery from the cloud storage bucket, while Sualeh developed the React-based WebUI.
This asset library is now a core part of our growing scene generation pipeline, enabling dynamic asset spawning from text prompts with unprecedented flexibility.
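The text-to-asset retrieval idea can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the three-dimensional vectors, the asset names, and the `search` helper are hypothetical stand-ins for real CLIP embeddings and a real vector database, not the pipeline actually used. The sketch only shows the core step, ranking stored image embeddings by cosine similarity to a query embedding.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cosine_similarity(a, b):
    """Cosine similarity between two vectors of equal length."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


# Hypothetical index: one embedding per rendered asset image (toy values).
ASSET_INDEX = {
    "wooden_chair": [0.9, 0.1, 0.0],
    "office_desk": [0.7, 0.6, 0.1],
    "pine_tree": [0.0, 0.2, 0.9],
}


def search(query_embedding, index, top_k=2):
    """Return the names of the top_k assets most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, embedding), name)
        for name, embedding in index.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# Hypothetical embedding of a text query such as "a chair".
query = [0.8, 0.2, 0.0]
results = search(query, ASSET_INDEX)
print(results)
```

In a real setup, the query vector would come from the text encoder of the same model that embedded the renders, which is what places text and images in one shared space.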

In this cutting-edge project at Altroverse, I harnessed the power of Unreal Engine to generate a photorealistic synthetic dataset with precise annotations for training CNN models. Synthetic data annotation offers a huge advantage: it's far more efficient than manually labeling real-world images. For this dataset, I focused on three key types of annotations:
➸ Depth Maps - Teaching AI to understand distances in a scene
➸ Semantic Segmentation - Differentiating categories of objects within an image
➸ Instance Segmentation - Distinguishing individual objects within categories

To achieve highly realistic synthetic data, we used scene captures and post-process materials for the annotations. This pushed the boundaries of photorealism, ensuring the dataset closely mirrored real-world conditions. Since seamless collaboration was essential, I also set up Perforce version control across all office PCs, making teamwork smoother and eliminating versioning chaos. This project was an exciting fusion of gaming technology and AI research, reinforcing that Unreal Engine isn't just for games! 🚀

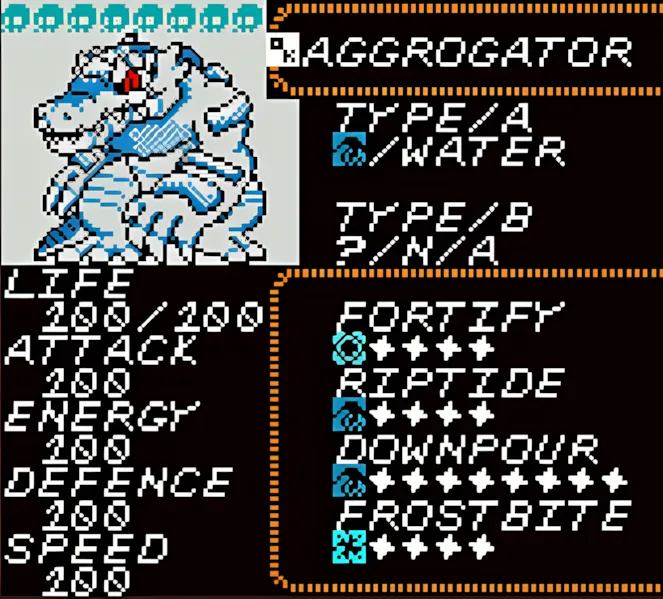

While lurking on Discord, I met Mari, an insanely talented art student who was trying to bring the charm of classic Pokémon games (think Gold, Silver, and Crystal) to life. As someone who played through those gems at least five times, I knew I had to be a part of it. At its core, Teramancers is a retro-inspired monster-taming RPG with top-down pixel art and turn-based battles, but it's not just another Pokémon clone. What makes it different?
➸ 🎨 The Art & The Vision - The world and monster designs? All Mari. The programming? That's where I stepped in
➸ ⚔️ 4v4 Battles - Teramancers has a unique twist on turn-based combat where the player fights alongside their monsters (Teraforms)
➸ 🎯 Action Points (AP) - Instead of each character having separate turns, all abilities draw from a shared pool of AP, forcing players to strategize across their whole team. Once both teams lock in their actions, every Teraform and player takes their turn from fastest to slowest, leading to a highly tactical, dynamic battle flow
➸ 🛠 The Tech Journey - I started by building an MVP in Unity, but pixel-perfect scaling was a nightmare. So, I took the plunge and switched to GameMaker, learning the engine from scratch to get the crisp retro aesthetic just right

The prototype started looking amazing, but life got busy, and I had to put the project on pause.
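The shared AP pool and fastest-to-slowest resolution can be sketched roughly as follows. This is an illustration in Python, not the game's GameMaker code: the `Action` fields, the actor names, the AP costs, and the rule that an unaffordable action is simply skipped are all assumptions, since the description only states that abilities draw from one pool and that turns resolve by speed.

```python
from dataclasses import dataclass


@dataclass
class Action:
    actor: str    # hypothetical combatant name
    speed: int    # higher speed acts earlier
    ap_cost: int  # AP drawn from the team's shared pool


def lock_in(actions, ap_pool):
    """Accept chosen actions in order while the shared AP pool can pay for them.

    Assumption: an action the pool cannot afford is skipped, not queued.
    """
    accepted = []
    remaining = ap_pool
    for action in actions:
        if action.ap_cost <= remaining:
            accepted.append(action)
            remaining -= action.ap_cost
    return accepted, remaining


def turn_order(team_a, team_b):
    """Merge both teams' locked-in actions, fastest actor first.

    sorted() is stable, so speed ties keep their input order (team_a first);
    that tie-break rule is an assumption.
    """
    return sorted(team_a + team_b, key=lambda a: a.speed, reverse=True)


player_team, player_ap_left = lock_in(
    [Action("Player", 40, 2), Action("Emberfox", 70, 2), Action("Stonehide", 20, 3)],
    ap_pool=5,
)
rival_team, rival_ap_left = lock_in(
    [Action("Rival", 55, 1), Action("Mosswing", 90, 3)],
    ap_pool=5,
)
order = [a.actor for a in turn_order(player_team, rival_team)]
print(order, player_ap_left, rival_ap_left)
```

With a pool of 5 AP, the third player-side action (cost 3) no longer fits after the first two are paid for, which is the kind of trade-off a shared pool forces across the whole team.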

Ever heard of ApocZ? If you had an Xbox 360 and couldn't play DayZ (because it was PC-exclusive), then you might remember this janky-yet-charming open-world survival game. It had bad graphics, clunky gameplay, and somehow... an amazing community. Years later, a group of nostalgic players gathered on Discord, reminiscing about the good old days. That's when I thought: why not bring it back? With zero existing assets or documentation, I set out to rebuild ApocZ from scratch in Unreal Engine 5, reverse-engineering gameplay footage from the depths of YouTube. In the process, I leveled up my skills in:
➸ 🎮 Multiplayer Blueprinting for seamless PvPvE action
➸ 🌍 Procedural Terrain Generation to recreate that vast, abandoned island
➸ 🎨 Material Graphs & Animations to push UE5's graphical fidelity
➸ 🕺 Animation Blueprints & Montages for immersive character movement

I started with a multiplayer FPS locomotion system that turned out great. Then came the weapons and inventory system. Combining logic, skeletal mesh sockets, animations, and UI turned into a monster of a task. As I struggled with blueprint organization, I realized I needed C++ for better structure and performance. Instead of hacking together messy workarounds, I made the tough call to scrap and restart the project. But with life getting busy, it's on pause, for now.

Enter Edenfell, a post-apocalyptic high-fantasy warzone where magic, strategy, and skill collide! This isn't your typical MMO or MOBA; it's a brand-new genre: DAMS-RPG (Dynamic Arena Multiplayer Strategy RPG). Here, personalized avatars, intense combat, and strategic gameplay create a battlefield that's always evolving. Working with a fully remote team of Programmers, 2D & 3D Artists, Animators, and Musicians spanning multiple countries, I contributed to key gameplay systems, including:
➸ 🤖 Enemy AI - Dynamic attack modes, Tracking, and Combat behaviors
➸ 🌍 Multiplayer Lobby - Teaming system, seamless connections
➸ 🕹️ Player Movement & Combat - Fluid 3rd Person controls, Networked extensible animations
➸ 🏹 Weapons & Attack Combos - Melee/Ranged mechanics, Chaining attacks

This project was a crash course in networked gameplay. I dove deep into Unity NGO (Netcode for GameObjects) and multiplayer topologies, learning how to build responsive, low-latency online combat by distributing the logic between the server and the clients. With its huge scope and ambitious mechanics, Edenfell is still in development. While I had to step away due to other commitments, the team is pushing forward, and this game is shaping up to be something truly special. Keep an eye on it; this might just be the next big thing. 🚀

Tired of big tech locking your media behind paywalls and cloud subscriptions? Image-Search is my open-source solution to that problem: a Google Photos-like experience for local media on your desktop, built for privacy-conscious photographers, media studios, and power users who want full control over their files. Here are the key features:
➸ 📌 Smart Tagging - Manually label files or let AI do the work with facial recognition & image captioning
➸ 🔍 Advanced Search - Instantly find images based on people, objects, or descriptions
➸ 💾 Local-First Approach - No cloud, no subscriptions, just you and your data

Built using PyQt6 for the UI and SQLite for storage, this was my final year project, and it earned me an A grade (GPA: 4.0/4.0) 🎉

During Winter Game Jam 2023 by MLABS, my friends Mansoor, Shaheer, Fatima, and I (along with Sameer, a senior dev from Tintash) set out to create a successor to Dungeon Survivor in just two weeks. With FYP presentations and tight deadlines, we faced plenty of challenges, especially with communication and time constraints. Despite the hurdles, we still managed to create a decent game with character, and it became a major learning experience.

The Game Premise: An adventurer accidentally enters a forbidden dungeon and must solve timed puzzles to keep the torches lit and avoid being consumed by deadly beasts.

Though we didn't make it past the selection phase for CPI testing, this project was a reminder that not everything I build will turn to gold, but each experience is a step forward in growth, teamwork, and adaptability. I worked on:
➸ 🎮 Game Design & Project Management
➸ 🗺️ Level Design & Team Integration
➸ 🕹️ Grid Movement, Doors & Switches, UI

This project was part of a larger team effort at RISETech, where I built a 3D Model Viewer in Unity for a medical mobile and web app designed to assist doctors in clinical practice. Using a spherical coordinate system, I mapped touchscreen gestures to handle rotation, zoom, and pan functions seamlessly. Beyond navigation, we implemented:
➸ 🩺 An annotation system using raycasts for precise note-taking on 3D models
➸ 🔍 An outlining system that divides the mesh of patient models into segments for better visual clarity

The 3D models were developed by Mansoor, while I focused on the Unity integration. To bridge Unity with Flutter, I developed an API with over 30 functions, handling everything from camera controls to annotation management. Each function was rigorously tested inside Unity, ensuring smooth two-way communication between the Flutter frontend and Unity backend. This project was an interesting mix of game dev, UI/UX design, and medical tech: a reminder that good engineering isn't about sticking to one domain but about making things work, no matter the field.
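As a rough illustration of the spherical-coordinate idea, the sketch below keeps the camera on a sphere around a target point and lets gestures change the two angles (rotation) and the radius (zoom). It is written in Python rather than the project's Unity C#, and the constants, function names, and clamping limits are hypothetical; panning, which would shift the target point, is left out for brevity. The axis convention assumes Y is up, as in Unity.

```python
import math

# Hypothetical tuning constants, not taken from the original project.
DEG_PER_PIXEL = 0.5
MIN_RADIUS = 1.0
MAX_RADIUS = 20.0


def orbit_camera_position(target, radius, azimuth_deg, elevation_deg):
    """Convert spherical coordinates around `target` to a Cartesian position."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = target[0] + radius * math.cos(el) * math.sin(az)
    y = target[1] + radius * math.sin(el)
    z = target[2] + radius * math.cos(el) * math.cos(az)
    return (x, y, z)


def apply_gesture(state, drag_dx=0.0, drag_dy=0.0, pinch=1.0):
    """Return a new camera state after a drag (rotate) and/or pinch (zoom).

    drag_dx / drag_dy are in pixels; pinch > 1 means fingers spread (zoom in).
    """
    azimuth = (state["azimuth"] + drag_dx * DEG_PER_PIXEL) % 360.0
    # Clamp elevation so the camera never flips over the poles.
    elevation = max(-89.0, min(89.0, state["elevation"] + drag_dy * DEG_PER_PIXEL))
    radius = max(MIN_RADIUS, min(MAX_RADIUS, state["radius"] / pinch))
    return {"azimuth": azimuth, "elevation": elevation, "radius": radius}


state = {"azimuth": 350.0, "elevation": 80.0, "radius": 5.0}
state = apply_gesture(state, drag_dx=40.0, drag_dy=40.0, pinch=2.0)
position = orbit_camera_position(
    (0.0, 0.0, 0.0), state["radius"], state["azimuth"], state["elevation"]
)
print(state, position)
```

Storing the camera as two angles and a radius keeps each gesture a one-line update, and the Cartesian position is only derived when the camera is actually placed.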

Shape Race

Sep 2022

Shape Race was my ambitious attempt at launching a game on the Play Store and experimenting with the process. Did it make it there? Nope. But did I learn a ton along the way? Absolutely. At its core, Shape Race is a fast-paced arcade game where the speed ramps up the longer you play. Combine that with some hard turns and you've got a recipe for adrenaline-fueled chaos. Staying vigilant and reacting quickly is the only way to chase those high scores—and trust me, it gets intense. While working on this game, I dove headfirst into splines, object pooling, post-process effects, and crafting engaging player experiences. Each feature pushed my skills further, and though the game didn't hit the Play Store, it definitely left a mark on me.

Ever wanted to spice up your game with slick combo counters and satisfying text popups? Well, I did—so I built a small but mighty Unity plugin that does just that! This arcade-style combo system lets you integrate multi-phase combo counters with optional text popups to give players instant feedback (because who doesn't love seeing flashy numbers go up?). For smooth animations, it plays nicely with both DOTween and LeanTween—the two heavyweights of Unity tweening. At one point, I considered writing my own tweening library to ditch external dependencies... but then I remembered scope creep is real, and this was meant to be a simple tool, not a full-blown engine rewrite. Priorities, right? 😅

VRM Batch Exporter Unity Tool

Dec 2021

When a startup needed to automate the creation of NFT 3D avatars in VRM format, they ran into a problem—there weren't many tools out there for the job. So, they reached out to me, and I whipped up a handy little script (with a UI, so let's call it semi-fancy). At its core, it's a simple for-loop that reads any texture file from the resources folder and applies it to a rigged 3D avatar—either individually or in bulk. But hey, just because it's simple doesn't mean it isn't effective! The UI lets you tweak settings, making the whole process smoother and a lot more user-friendly. Since VRM is a niche format, this was a fun challenge to figure out. If you want to see it in action, check out the video for a live demo of the generated avatars!

During the final four weeks of my internship at MLABS, I took part in an intense game jam with over 300 participants, and what a wild ride it was! 🚀 From late-night brainstorming sessions to rapid prototyping, my team and I poured everything into bringing our idea to life. I naturally stepped into the role of group leader, working alongside my incredibly talented teammates, Aiyan and Hajra. Thanks to our collective effort, we built our game swiftly and effectively, balancing creativity with execution. The submissions were judged on design and implementation (50%) and a marketing test (50%), where each game's Cost Per Install (CPI) was measured using a $150 ad budget. Not only did our game excel in both categories, but we also won the competition, earning the "Rookies of the Year" title! 🏆 It was an unforgettable experience that reinforced my love for game development, teamwork, and making ideas come to life under pressure. My contributions included:
➸ 🎮 Game Design & Project Management
➸ 🎨 Level Design & Hand Animations
➸ ⚙️ Event Systems, Player & NPC Controllers

I built this game from scratch (well, aside from the textures, models, and music) as a semester project for my Computer Graphics course. It was during the COVID lockdown, so naturally, I spent an entire month doing nothing but working on this game, because what else was there to do? 😅 My goal was to recreate the movement mechanics from an old classic, Wonderland: Secret Worlds. I started with Unity's physics system (colliders, rigidbodies, the whole deal) only to quickly realize physics had other plans. The movement was unpredictable, janky, and just plain wrong. So, I did what any determined (or slightly stubborn) developer would do: I scrapped everything and rebuilt it from the ground up using C# arrays. Looking back, my code was... well, let's just say it violated several coding standards and possibly a few laws of nature. But this project was a major stepping stone in my journey. It taught me problem-solving, iteration, and the art of knowing when to throw your code in the trash and start over. And for that, I'm still proud of it.
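To give a feel for why array-based movement is more predictable than physics here, below is a minimal sketch of tile movement on a 2D grid. It is an illustration in Python, not the original C# code; the level layout, the `#` wall marker, and the `try_move` helper are all hypothetical.

```python
# Hypothetical level: '#' is a wall, '.' is walkable floor.
LEVEL = [
    "#####",
    "#...#",
    "#.#.#",
    "#...#",
    "#####",
]
WALL = "#"

# (row delta, column delta) for each direction.
DIRECTIONS = {
    "up": (-1, 0),
    "down": (1, 0),
    "left": (0, -1),
    "right": (0, 1),
}


def try_move(level, position, direction):
    """Return the new (row, col) if the target tile is free, else the old one."""
    row, col = position
    d_row, d_col = DIRECTIONS[direction]
    new_row, new_col = row + d_row, col + d_col
    in_bounds = 0 <= new_row < len(level) and 0 <= new_col < len(level[0])
    if in_bounds and level[new_row][new_col] != WALL:
        return (new_row, new_col)
    return position  # blocked: stay exactly where we were


player = (1, 1)
player = try_move(LEVEL, player, "right")  # free tile, player moves
after_right = player
player = try_move(LEVEL, player, "down")   # wall below, player stays
print(after_right, player)
```

Because every move is a lookup in the array, the outcome is fully deterministic: the player is always on exactly one tile, with none of the drift or jitter a rigidbody can introduce.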